Summary

This article provides an in-depth guide on how to scrape Champions League shots on target data from PaddyPower and Bet365 using Python and Selenium, highlighting crucial strategies for overcoming challenges in dynamic web scraping.

Key Points:

- Implement advanced scraping techniques to bypass anti-scraping mechanisms on PaddyPower and Bet365, such as rotating proxies and user-agent spoofing.

- Optimize data extraction processes using efficient handling methods like asynchronous operations, parallel processing, and advanced XPath/CSS selectors to navigate complex HTML structures.

- Develop a robust scraping framework with error handling, logging, and automated testing to ensure adaptability and maintainability for future website changes.
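The error-handling and logging point can be sketched as a small retry wrapper. This is a minimal illustration and not part of the original scraper: `with_retries` and `flaky_step` are hypothetical names, and the attempt count and delay are arbitrary choices.

```python
import logging
import time
from functools import wraps

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("scraper")


def with_retries(attempts=3, delay=0.5):
    """Retry a scraping step a few times, logging each failure."""
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            for attempt in range(1, attempts + 1):
                try:
                    return func(*args, **kwargs)
                except Exception as exc:
                    log.warning("%s failed (attempt %d/%d): %s",
                                func.__name__, attempt, attempts, exc)
                    if attempt == attempts:
                        raise  # give up after the last attempt
                    time.sleep(delay)
        return wrapper
    return decorator


calls = {"count": 0}


@with_retries(attempts=3, delay=0)
def flaky_step():
    """Hypothetical stand-in for a Selenium call that fails twice, then succeeds."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise RuntimeError("element not ready")
    return "ok"


result = flaky_step()
```

Wrapping individual steps this way keeps one stale element or slow page from ending the whole run, while the log records which step failed and how often.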

Make sure to read through to the conclusion of this article for the most valuable Positive Expected Value betting options!

Bet365 Insights

    import time
    from fractions import Fraction

    import pandas as pd
    from pybettor import convert_odds, implied_prob
    from selenium import webdriver
    from selenium.webdriver import ChromeOptions
    from selenium.webdriver.chrome.service import Service
    from selenium.webdriver.common.by import By


    def driver_code():
        options = ChromeOptions()
        # Replace with the path to your ChromeDriver binary
        service = Service(executable_path=r'YOUR PATH HERE')
        useragentarray = [
            "Mozilla/5.0 (Linux; Android 13) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/113.0.5672.76 Mobile Safari/537.36"
        ]

        # All options must be set before the browser is created
        options.add_argument("--disable-blink-features=AutomationControlled")
        options.add_argument("--no-sandbox")
        options.add_argument("--disable-dev-shm-usage")
        options.add_argument("--disable-search-engine-choice-screen")
        options.add_argument("--disable-popup-blocking")
        # options.add_argument(f"--user-data-dir=./profile{driver_num}")
        options.add_experimental_option("excludeSwitches", ["enable-automation"])
        options.add_experimental_option("useAutomationExtension", False)
        options.add_argument("disable-infobars")

        driver = webdriver.Chrome(service=service, options=options)

        # Hide the webdriver flag and present a mobile Chrome user agent
        driver.execute_script(
            "Object.defineProperty(navigator, 'webdriver', {get: () => undefined})"
        )
        driver.execute_cdp_cmd(
            "Network.setUserAgentOverride", {"userAgent": useragentarray[0]}
        )

        driver.get("https://www.bet365.com/#/AC/B1/C1/D1002/E94400598/G50538/I^18/")
        time.sleep(1)
        return driver


    # Accept cookies if the consent popup is present
    def accept_cookies(driver):
        cookies = driver.find_elements(By.CSS_SELECTOR, ".ccm-CookieConsentPopup_Accept")
        if len(cookies) > 0:
            cookies[0].click()


    # Open a link in a new tab and switch to it
    def open_tab(driver, link):
        driver.execute_script(f"""window.open('{link}', "_blank");""")
        time.sleep(2)
        driver.switch_to.window(driver.window_handles[-1])

Key Points Summary

- Make sure to install Selenium and pandas packages in your Python environment.

- Download the appropriate ChromeDriver to use with Selenium.

- The goal is to scrape Champions League shots-on-target odds from Bet365 and PaddyPower.

- You will need to handle pop-ups on the webpage before accessing data, such as accepting the cookie banner, and click controls that expand hidden content, such as collapsed fixtures or a `Show all matches` button.

- Identify web elements using tags, XPath, or class names when scraping data.

- Consider using both Selenium and Beautiful Soup for effective web scraping.

Web scraping can seem daunting at first, especially when dealing with pop-ups and dynamic content. But once you get the hang of it, it's a powerful tool that opens up vast amounts of information just waiting to be explored. Whether you're gathering sports statistics or any other type of data, learning how to navigate through these challenges can be incredibly rewarding.
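Dynamic content often appears a moment after the page loads, so a fixed pause is either too short or wastefully long. The sketch below polls for a condition instead. `wait_until` and the fake element lookup are hypothetical stand-ins written for illustration; in a real Selenium script, `WebDriverWait` together with `expected_conditions` serves the same purpose.

```python
import time


def wait_until(condition, timeout=10.0, poll=0.25):
    """Call `condition` repeatedly until it returns a truthy value or time runs out."""
    deadline = time.monotonic() + timeout
    while True:
        value = condition()
        if value:
            return value
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll)


# Hypothetical stand-in for driver.find_elements(...): empty on the first two polls.
polls = {"count": 0}


def find_cookie_buttons():
    polls["count"] += 1
    return ["accept-button"] if polls["count"] >= 3 else []


buttons = wait_until(find_cookie_buttons, timeout=2.0, poll=0.01)
```

The call returns as soon as the element list is non-empty, so the script waits only as long as the page actually needs.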

Extended Comparison:

| Tool | Purpose | Advantages | Disadvantages |
|---|---|---|---|
| Selenium | Automate web navigation and interact with JavaScript elements. | Handles dynamic content, manages alerts and pop-ups effectively. | Slower compared to static scraping libraries due to browser automation. |
| Beautiful Soup | Parse HTML and XML documents. | Easy to use for navigating and searching the parse tree, great for extracting data from static pages. | Does not handle JavaScript rendered content. |
| Pandas | Data manipulation and analysis. | Powerful data structures, ideal for handling large datasets, easy integration with other libraries. | Not specifically designed for web scraping; requires combination with other tools. |
| XPath | Query language for selecting nodes from an XML document. | Highly precise in identifying elements within complex HTML structures. | Steeper learning curve than CSS selectors for beginners. |
| ChromeDriver | Interface between Selenium and Chrome Browser. | Essential for running Selenium scripts on Chrome; supports latest browser features. | Requires matching version with installed Chrome browser. |

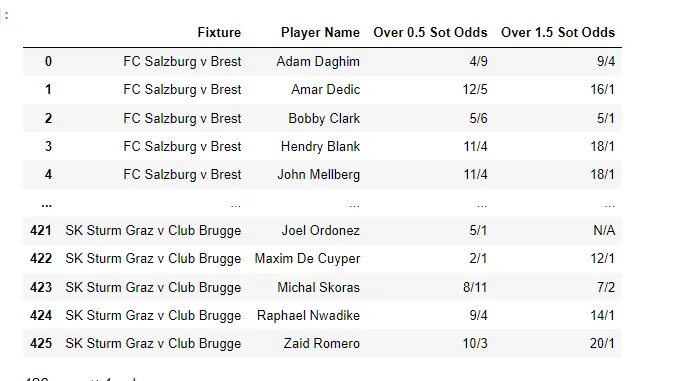

    driverBet365 = driver_code()
    open_tab(driverBet365, "https://www.bet365.com/#/AC/B1/C1/D1002/E94400598/G50538/I^18/")
    time.sleep(5)
    accept_cookies(driverBet365)

    # Open closed matches so their markets are rendered
    closedMatches = driverBet365.find_elements(
        By.CSS_SELECTOR,
        ".gl-MarketGroupPod.src-FixtureSubGroupWithShowMore.src-FixtureSubGroupWithShowMore_Closed"
    )
    for match in closedMatches:
        try:
            match.click()
            time.sleep(2)
        except Exception:
            continue
    time.sleep(2)

    # Note: the Show More links (.msl-ShowMore_Link) are not clicked in this version

    over1SoTOdds = []
    over2SoTOdds = []
    Over3SoTOdds = []
    Over4SoTOdds = []
    Over5SoTOdds = []
    playerNamesFinal = []
    fixtures = []

    # Map each column header to the list that collects its odds
    lines = {
        "0.5": over1SoTOdds,
        "1.5": over2SoTOdds,
        "2.5": Over3SoTOdds,
        "3.5": Over4SoTOdds,
        "4.5": Over5SoTOdds
    }


    def add_to_list(key, value):
        if key in lines:
            lines[key].append(value)
        else:
            print(f"Key '{key}' not found in lines dictionary")


    tables = driverBet365.find_elements(
        By.CSS_SELECTOR, ".gl-MarketGroupPod.src-FixtureSubGroupWithShowMore"
    )
    for i in tables:
        fixture = i.find_elements(By.CSS_SELECTOR, ".src-FixtureSubGroupButton_Text")
        playerNames = i.find_elements(By.CSS_SELECTOR, ".srb-ParticipantLabelWithTeam_Name")
        sections = i.find_elements(
            By.CSS_SELECTOR,
            ".srb-HScrollPlaceColumnMarket.gl-Market.gl-Market_General.gl-Market_General-columnheader"
        )
        # One fixture entry per player so the lists stay aligned
        for player in playerNames:
            playerNamesFinal.append(player.text)
            fixtures.append(fixture[0].text)
        # Each section is one odds column (0.5, 1.5, ...)
        for j in sections:
            line = j.find_element(By.CSS_SELECTOR, ".srb-HScrollPlaceHeader")
            odds = j.find_elements(
                By.CSS_SELECTOR,
                ".gl-ParticipantOddsOnly.gl-Participant_General.gl-Market_General-cn1"
            )
            for k in odds:
                if len(k.text) == 0:
                    # Empty text means the price is suspended
                    add_to_list(line.text, "N/A")
                    print("Added Suspended Odds to List")
                else:
                    add_to_list(line.text, k.text)
                    print(f"Added {line.text} to List")

    data = list(zip(fixtures, playerNamesFinal, over1SoTOdds, over2SoTOdds))

    # Create DataFrame
    dfBet365 = pd.DataFrame(
        data,
        columns=['Fixture', 'Player Name', 'Over 0.5 Sot Odds', 'Over 1.5 Sot Odds']
    )
    dfBet365

The resulting DataFrame provides a structured view of the collected data. Each row represents one player in one fixture, and the columns hold the fixture, the player name, and the Over 0.5 and Over 1.5 shots-on-target odds.
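One thing to watch when building the rows: `zip` stops at the shortest input, so if one odds column has fewer entries than the player list, rows are dropped silently and prices can end up beside the wrong player. A length check before building the DataFrame makes that failure visible. The sketch below uses made-up names and prices, and `check_equal_lengths` is a hypothetical helper rather than part of the original code.

```python
def check_equal_lengths(**columns):
    """Raise if the named lists differ in length; otherwise return the common length."""
    lengths = {name: len(values) for name, values in columns.items()}
    if len(set(lengths.values())) > 1:
        raise ValueError(f"column lengths differ: {lengths}")
    return next(iter(lengths.values()), 0)


# Made-up sample data: the second odds column is one entry short.
fixtures = ["Team A v Team B", "Team A v Team B", "Team A v Team B"]
players = ["Player One", "Player Two", "Player Three"]
over_05 = ["1/2", "4/6", "5/4"]
over_15 = ["2/1", "3/1"]

rows = list(zip(fixtures, players, over_05, over_15))
truncated_rows = len(rows)  # zip yields only as many rows as the shortest list

try:
    check_equal_lengths(fixtures=fixtures, players=players,
                        over_05=over_05, over_15=over_15)
    mismatch_detected = False
except ValueError:
    mismatch_detected = True
```

On Python 3.10 or later, `zip(..., strict=True)` raises on mismatched lengths and is a shorter alternative to a separate check.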

Paddy Power is a prominent name in the sports betting industry, and it is the second sportsbook scraped in this article. Gathering its prices alongside Bet365's makes it possible to compare odds for the same market across sportsbooks.

The scraper below streamlines the collection of this data. It opens each Champions League fixture, selects the Shots tab, and extracts player names with their shots-on-target odds, saving the time and effort of collecting them manually.

With odds from both sites held in the same tabular format, the comparison in the final section can be automated rather than done by eye, which makes it easier to base betting decisions on current prices.

    # Uses the same imports as the Bet365 section

    def driver_codePP():
        options = ChromeOptions()
        # Replace with the path to your ChromeDriver binary
        service = Service(executable_path=r'YOUR PATH HERE')
        useragentarray = [
            "Mozilla/5.0 (Linux; Android 13) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/113.0.5672.76 Mobile Safari/537.36"
        ]

        # All options must be set before the browser is created
        options.add_argument("--disable-blink-features=AutomationControlled")
        options.add_argument("--no-sandbox")
        options.add_argument("--disable-dev-shm-usage")
        options.add_argument("--disable-search-engine-choice-screen")
        options.add_argument("--disable-popup-blocking")
        # options.add_argument(f"--user-data-dir=./profile{driver_num}")
        options.add_experimental_option("excludeSwitches", ["enable-automation"])
        options.add_experimental_option("useAutomationExtension", False)
        options.add_argument("disable-infobars")

        driver = webdriver.Chrome(service=service, options=options)
        driver.execute_script(
            "Object.defineProperty(navigator, 'webdriver', {get: () => undefined})"
        )
        driver.execute_cdp_cmd(
            "Network.setUserAgentOverride", {"userAgent": useragentarray[0]}
        )

        driver.get("https://www.paddypower.com/uefa-champions-league")
        time.sleep(1)
        return driver


    # Accept cookies (redefines accept_cookies for Paddy Power's banner)
    def accept_cookies(driver):
        cookies = driver.find_elements(
            By.XPATH, "/html/body/div[4]/div[3]/div/div[1]/div/div[2]/div/button[3]"
        )
        if len(cookies) > 0:
            cookies[0].click()


    driverPP = driver_codePP()
    time.sleep(2)
    accept_cookies(driverPP)

    players = {}
    gamesSelector = ".ui-scoreboard-coupon-template__content--vertical-aligner"
    games = driverPP.find_elements(By.CSS_SELECTOR, gamesSelector)

    # Visit at most 10 fixtures, and never more than are listed
    lengthGames = min(10, len(games))
    for i in range(lengthGames):
        time.sleep(4)
        # Re-find the fixtures: the page is reloaded on every iteration
        games = driverPP.find_elements(By.CSS_SELECTOR, gamesSelector)
        games[i].click()
        time.sleep(4)

        tabs = driverPP.find_elements(By.CSS_SELECTOR, ".tab__title")
        for j in tabs:
            if j.text == "Shots":
                j.click()
                break
        time.sleep(3)

        fixture = driverPP.find_elements(By.CSS_SELECTOR, ".dropdown-selection__label")
        titles = driverPP.find_elements(By.CSS_SELECTOR, ".accordion__title")
        titles[1].click()
        showAllSelections = driverPP.find_elements(By.CSS_SELECTOR, ".market-layout__toggle")
        showAllSelections[0].click()
        showAllSelections[1].click()

        card = driverPP.find_elements(
            By.CSS_SELECTOR,
            ".accordion.accordion--open.accordion--with-divider.accordion--primary"
        )
        over05ShotsOnTargetCard = card[0]
        multipleShotsOnTargetCard = card[1]
        players05 = over05ShotsOnTargetCard.find_elements(By.CSS_SELECTOR, ".market-row__text")
        playersMultiple = multipleShotsOnTargetCard.find_elements(By.CSS_SELECTOR, ".market-row__text")
        odds05 = over05ShotsOnTargetCard.find_elements(By.CSS_SELECTOR, ".btn-odds__label")
        oddsMultiple = multipleShotsOnTargetCard.find_elements(By.CSS_SELECTOR, ".btn-odds__label")
        odds2PlusShots = oddsMultiple[::3]
        odds3Shots = oddsMultiple[1::3]

        for p in range(len(players05)):
            players[players05[p].text] = {
                "Over 0.5 SOT Odds": odds05[p].text,
                "Betting Site": "PaddyPower",
                "Fixture": fixture[0].text
            }
        for p in range(len(playersMultiple)):
            # setdefault avoids a KeyError for players missing from the 0.5 card
            players.setdefault(
                playersMultiple[p].text, {"Betting Site": "PaddyPower"}
            ).update({
                "Over 1.5 SOT Odds": odds2PlusShots[p].text,
                "Over 2.5 SOT Odds": odds3Shots[p].text,
                "Fixture": fixture[0].text
            })

        # Return to the competition page for the next fixture
        driverPP.get("https://www.paddypower.com/uefa-champions-league")

    dfPP = pd.DataFrame.from_dict(players, orient='index')
    dfPP.reset_index(inplace=True)
    dfPP.rename(columns={
        "index": "Player Name",
        "Over 0.5 SOT Odds": "Over 0.5 SOT Odds PP",
        "Over 1.5 SOT Odds": "Over 1.5 SOT Odds PP",
        "Over 2.5 SOT Odds": "Over 2.5 SOT Odds PP"
    }, inplace=True)

The resulting DataFrame provides a structured overview of the Paddy Power data. Each row corresponds to one player, and the columns hold the fixture, the betting site, and the odds for each shots-on-target line, ready to be compared with the Bet365 DataFrame.

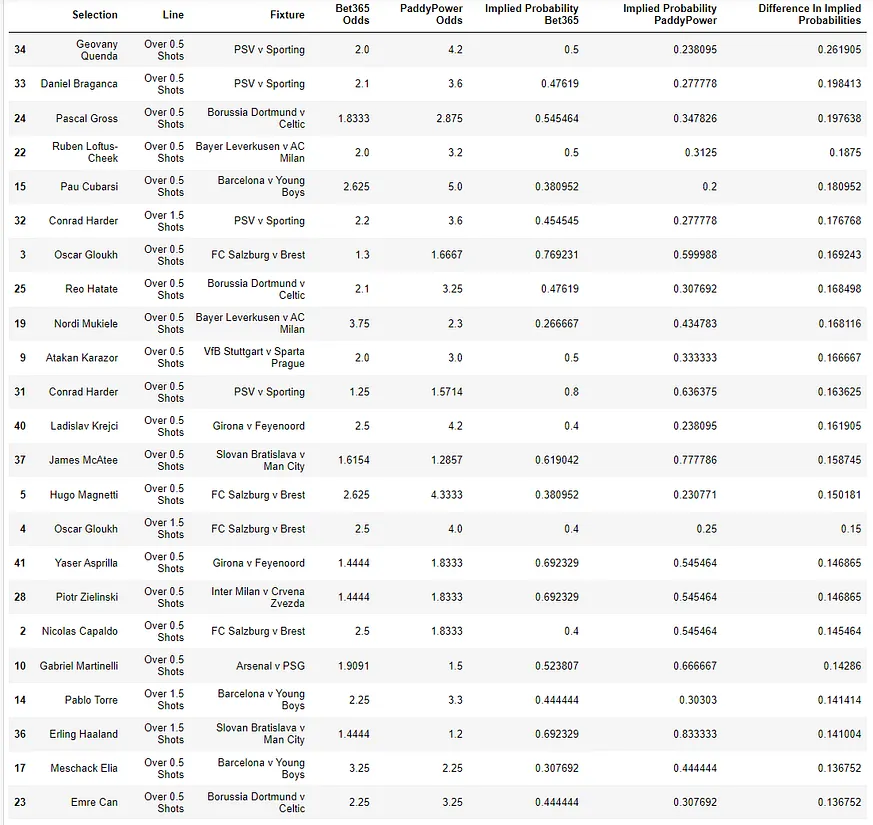
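The slices `oddsMultiple[::3]` and `oddsMultiple[1::3]` in the Paddy Power loop imply that the multi-shot card returns its prices as one flat list with three prices per player, in row order. That layout is an assumption read off the code rather than something the page guarantees, so a cheap guard is worthwhile. A toy version with made-up names and prices:

```python
# Made-up flat list: three prices per player, in row order.
players_multiple = ["Player One", "Player Two"]
odds_multiple = ["6/4", "5/1", "16/1",   # Player One: 2+, 3+, third column
                 "2/1", "7/1", "25/1"]   # Player Two: 2+, 3+, third column

odds_2_plus = odds_multiple[::3]    # every third price, starting at index 0
odds_3_plus = odds_multiple[1::3]   # every third price, starting at index 1

# Guard against a layout change: the flat list must hold exactly 3 prices per player.
assert len(odds_multiple) == 3 * len(players_multiple)

by_player = {
    name: {"Over 1.5 SOT Odds": two, "Over 2.5 SOT Odds": three}
    for name, two, three in zip(players_multiple, odds_2_plus, odds_3_plus)
}
```

If the site adds or removes a column, the guard fails immediately instead of quietly pairing players with the wrong prices.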

Bringing everything together to identify Positive Expected Value Opportunities requires a keen understanding of the underlying principles. It's essential to analyze various factors that contribute to expected value, including odds offered by bookmakers, historical performance data, and even current trends in the sport. By meticulously assessing these elements, bettors can pinpoint situations where the potential rewards outweigh the risks involved.

The next step involves calculating the expected value itself. This process is not merely about picking winners; it entails a thorough evaluation of probabilities against the odds provided. Bettors must consider whether they believe an outcome is more likely than what the bookmaker's odds suggest. If their assessment indicates a greater chance of success, this scenario creates a positive expected value opportunity worth pursuing.

Furthermore, maintaining discipline and consistency in your betting strategy is crucial for long-term success. It’s tempting to chase losses or deviate from one’s method due to emotional impulses after a series of setbacks. However, sticking to a well-researched plan and only wagering on bets that demonstrate positive expected value will yield better outcomes over time. Establishing clear guidelines for each bet helps mitigate impulsive decisions and reinforces responsible gambling practices.

Lastly, continuous learning and adaptation are vital components of successful sports betting. The landscape can shift rapidly due to injuries, changes in team dynamics, or other unforeseen events that may impact outcomes significantly. Staying informed and being willing to adjust strategies based on new information allows bettors to maintain their edge in finding profitable opportunities while minimizing potential losses along the way.
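As a worked example of the expected value calculation described above: fractional odds `a/b` correspond to decimal odds `1 + a/b`, the implied (break-even) probability is `1 / decimal`, and the expected profit per unit staked is `p * decimal - 1` for an estimated win probability `p`. The figures below are made up and the function names are illustrative only.

```python
from fractions import Fraction


def fractional_to_decimal(odds):
    """Convert fractional odds such as '5/4' to decimal odds (stake included)."""
    return float(Fraction(odds)) + 1.0


def implied_probability(decimal_odds):
    """Probability at which a bet at these odds breaks even."""
    return 1.0 / decimal_odds


def expected_value(win_probability, decimal_odds):
    """Expected profit per unit staked, given an estimated win probability."""
    return win_probability * decimal_odds - 1.0


decimal_odds = fractional_to_decimal("5/4")      # 2.25
break_even = implied_probability(decimal_odds)   # about 0.444
ev = expected_value(0.50, decimal_odds)          # 0.125
```

A positive result means the estimated probability is higher than the break-even probability implied by the odds; here a 50% estimate against a 44.4% break-even gives an expected profit of 12.5% of the stake.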

    selectionsList = []
    lineList = []
    fixtureList = []
    bet365OddsList = []
    ppOddsList = []
    impliedProbBet365List = []
    impliedProbPPList = []
    differenceImpliedProb = []

    # (line label, Bet365 column, Paddy Power column)
    markets = [
        ("Over 0.5", "Over 0.5 Sot Odds", "Over 0.5 SOT Odds PP"),
        ("Over 1.5", "Over 1.5 Sot Odds", "Over 1.5 SOT Odds PP"),
    ]


    def to_decimal(fractionalOdds):
        # "EVS" is how even money (1/1) is displayed
        if fractionalOdds == "EVS":
            fractionalOdds = "1/1"
        return convert_odds(float(Fraction(fractionalOdds)), "frac", "dec")[0]


    for index, row in dfBet365.iterrows():
        playerName = row['Player Name']
        fixture = row['Fixture']
        try:
            for label, bet365Col, ppCol in markets:
                ppOdds = dfPP.loc[dfPP['Player Name'] == playerName, ppCol]
                if len(ppOdds) == 0:
                    break  # player not listed on Paddy Power

                decimalBet365 = to_decimal(row[bet365Col])
                decimalPP = to_decimal(ppOdds.values[0])
                probBet365 = float(implied_prob(decimalBet365, category="dec")[0])
                probPP = float(implied_prob(decimalPP, category="dec")[0])
                difference = abs(probBet365 - probPP)

                # Flag gaps above 0.10 in implied probability where Bet365's decimal odds are below 4
                if difference > 0.10 and decimalBet365 < 4:
                    print(f"Selection: {label} SOT {playerName}")
                    # The longer price (lower implied probability) is the potential value bet
                    if probBet365 < probPP:
                        print(f"+EV Opportunity exists on Bet365 @ Odds {decimalBet365} Implied Probability is {probBet365}")
                        print(f"PP Odds: {decimalPP} Implied Probability is {probPP}")
                    else:
                        print(f"+EV Opportunity exists on PP @ Odds {decimalPP} Implied Probability is {probPP}")
                        print(f"Bet365 Odds: {decimalBet365} Implied Probability is {probBet365}")
                    print(" ")

                    selectionsList.append(playerName)
                    lineList.append(f"{label} Shots")
                    fixtureList.append(fixture)
                    bet365OddsList.append(decimalBet365)
                    ppOddsList.append(decimalPP)
                    impliedProbBet365List.append(probBet365)
                    impliedProbPPList.append(probPP)
                    differenceImpliedProb.append(difference)
        except Exception as e:
            # For example, suspended ("N/A") or missing odds cannot be parsed
            print("issue")
            print(e)

    df = pd.DataFrame({
        'Selection': selectionsList,
        'Line': lineList,
        'Fixture': fixtureList,
        'Bet365 Odds': bet365OddsList,
        'PaddyPower Odds': ppOddsList,
        'Implied Probability Bet365': impliedProbBet365List,
        'Implied Probability PaddyPower': impliedProbPPList,
        'Difference In Implied Probabilities': differenceImpliedProb
    })
    df_sorted = df.sort_values(by='Difference In Implied Probabilities', ascending=False)
    df_sorted

Sorted by the difference in implied probabilities, the resulting DataFrame lists the flagged selections with the largest price gaps first. It is advisable to cross-reference prices with other platforms to ensure that you are getting the most favorable odds.
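The comparison above matches players by exact name, so differences in case, accents, or spacing between the two sites would silently drop a player from the results. One possible mitigation, sketched here with made-up names, is to normalise names before matching. `normalise_name` is a hypothetical helper and not part of the original code.

```python
import unicodedata


def normalise_name(name):
    """Lower-case, strip accents, and collapse whitespace in a player name."""
    decomposed = unicodedata.normalize("NFKD", name)
    without_accents = "".join(
        ch for ch in decomposed if not unicodedata.combining(ch)
    )
    return " ".join(without_accents.lower().split())


# Made-up spellings of the same player on two sites.
bet365_name = "André Exámple"
paddy_power_name = "andre  example"

same_player = normalise_name(bet365_name) == normalise_name(paddy_power_name)
```

Applying the same function to the `Player Name` column of both DataFrames before the lookup would let such spellings match, at the cost of occasionally merging two genuinely different players with near-identical names.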

