Summary

This article provides a comprehensive guide on building your own odds scraper for sports betting, emphasizing the importance of leveraging technology to gain a competitive edge.

Key Points:

- Utilize advanced web scraping techniques, such as Selenium or Playwright, to extract dynamic odds from sportsbooks while bypassing anti-scraping measures.

- Integrate multiple sportsbook APIs for real-time odds comparison and identify high-probability arbitrage opportunities while managing rate limits and errors.

- Implement machine learning models to predict future odds movements, enhancing expected value calculations by factoring in team performance, injuries, and other variables.
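To make the arbitrage point concrete, here is a minimal sketch of the arithmetic, assuming the odds have already been converted to decimal form and that the best price for each outcome of a two-way market (for example Over 0.5 at one bookmaker and Under 0.5 at another) is known. The function names are illustrative and are not part of the scrapers in this article.

```python
from typing import List, Sequence


def implied_probability(decimal_odds: float) -> float:
    """Implied probability of an outcome priced at the given decimal odds."""
    if decimal_odds <= 1.0:
        raise ValueError("decimal odds must be greater than 1.0")
    return 1.0 / decimal_odds


def arbitrage_margin(best_odds: Sequence[float]) -> float:
    """Sum of implied probabilities over every outcome of one market.

    A total below 1.0 means the best available prices form an arbitrage.
    """
    return sum(implied_probability(o) for o in best_odds)


def arbitrage_stakes(best_odds: Sequence[float], bankroll: float) -> List[float]:
    """Split the bankroll so every outcome returns the same payout."""
    total = arbitrage_margin(best_odds)
    return [bankroll * implied_probability(o) / total for o in best_odds]


if __name__ == "__main__":
    # Hypothetical prices: Over at 2.10 with one book, Under at 2.05 with another.
    odds = [2.10, 2.05]
    print(f"margin: {arbitrage_margin(odds):.4f}")
    print("stakes:", [round(s, 2) for s in arbitrage_stakes(odds, 100.0)])
```

A margin below 1.0 only signals a candidate; stake limits and odds that move before both bets are placed are not modelled here.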

Yesterday's update: A detailed code review.

Make sure to update the URL and key for your Supabase project here.

    from supabase import create_client

    API_URL = 'SUPABASE PROJECT URL'
    API_KEY = 'SUPABASE PROJECT KEY'
    supabase = create_client(API_URL, API_KEY)

Key Points Summary

- Choose the page you want to scrape and inspect the website code.

- Identify the specific data you wish to extract from the HTML.

- Import necessary libraries like Beautiful Soup for Python or Colly for Go.

- Request the HTML content using a library like Requests in Python.

- Parse the relevant data and store it in a structured format, such as CSV.

- Monitor various online retailers' websites by regularly running your scraper.

Building a web scraper might seem daunting at first, but it's an incredibly rewarding process. With just a few steps, you can gather information from your favorite websites without having to manually search through endless pages. Whether you're tracking deals on Amazon UK or gathering product information from other retailers, creating your own scraper gives you control over what data you collect and how often. It's like having a personal assistant that does all the tedious work for you!
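The parse-and-store steps listed above can be sketched as follows. To keep the example runnable without a network connection or third-party packages, it uses the standard library's `html.parser` as a stand-in for Beautiful Soup and an inline HTML string in place of a page fetched with Requests. The sample markup, the column names, and the `html_to_csv` helper are all made up for illustration.

```python
import csv
import io
from html.parser import HTMLParser

# Invented sample markup standing in for a downloaded page.
SAMPLE_HTML = """
<table id="odds">
  <tr><td class="player">Player A</td><td class="odds">5/2</td></tr>
  <tr><td class="player">Player B</td><td class="odds">11/4</td></tr>
</table>
"""


class CellCollector(HTMLParser):
    """Collect the text of every <td>, grouped by the enclosing <tr>."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None
        self._in_cell = False

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag == "td" and self._row is not None:
            self._in_cell = True
            self._row.append("")

    def handle_endtag(self, tag):
        if tag == "td":
            self._in_cell = False
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None

    def handle_data(self, data):
        if self._in_cell:
            self._row[-1] += data.strip()


def html_to_csv(html: str) -> str:
    """Parse table rows out of the HTML and return them as CSV text."""
    parser = CellCollector()
    parser.feed(html)
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["player", "odds"])
    writer.writerows(parser.rows)
    return buffer.getvalue()


if __name__ == "__main__":
    print(html_to_csv(SAMPLE_HTML))
```

This only works for static HTML. The sportsbook pages scraped later in this article render their odds with JavaScript, which is why those scripts drive a real browser through Selenium instead.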

Extended Comparison:

| Step | Description | Tools/Libraries | Purpose |
|---|---|---|---|
| 1 | Choose the page you want to scrape and inspect the website code. | Browser Developer Tools | Identify the structure of data. |
| 2 | Identify the specific data you wish to extract from the HTML. | | Focus on odds, teams, and match details. |
| 3 | Import necessary libraries like Beautiful Soup for Python or Colly for Go. | Beautiful Soup, Colly | Facilitate HTML parsing. |
| 4 | Request the HTML content using a library like Requests in Python. | Requests (Python) | Fetch webpage content. |
| 5 | Parse the relevant data and store it in a structured format, such as CSV. | | Organize data for analysis. |
| 6 | Monitor various online retailers' websites by regularly running your scraper. | | Ensure up-to-date information. |
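Step 6, running the scraper regularly, can be approximated with a plain loop. The sketch below is one possible shape, not the article's own scheduler: `run_periodically` is a hypothetical helper, and the `sleep` parameter exists only so the pause can be swapped out in tests.

```python
import time
from typing import Any, Callable, List


def run_periodically(
    job: Callable[[], Any],
    interval_seconds: float,
    max_runs: int,
    sleep: Callable[[float], None] = time.sleep,
) -> List[Any]:
    """Call job() max_runs times, pausing interval_seconds between runs.

    An exception raised by one run is stored in the results list so that
    a single failed scrape does not stop the later ones.
    """
    results: List[Any] = []
    for run in range(max_runs):
        try:
            results.append(job())
        except Exception as exc:
            results.append(exc)
        if run < max_runs - 1:
            sleep(interval_seconds)
    return results


if __name__ == "__main__":
    # Placeholder job; a real one would call a scraper function.
    print(run_periodically(lambda: "scraped", interval_seconds=1, max_runs=3))
```

For long-running use, an operating-system scheduler such as cron is a common alternative to keeping a Python process alive.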

The Bet365 scraper has received an update, enhancing its functionality and performance. This revised version aims to provide users with a more efficient tool for extracting betting information from the platform with greater accuracy and speed. Users can expect improved navigation and a more intuitive interface that simplifies the overall betting experience.

In addition to these enhancements, this update introduces new features designed to keep pace with the evolving landscape of sports betting. Users can now access real-time data feeds, ensuring they have up-to-the-minute information at their fingertips when making crucial betting decisions. Furthermore, the updated scraper is equipped to handle larger volumes of data, making it ideal for serious bettors who require comprehensive insights into various markets.

Security measures have also been bolstered in this latest iteration of the Bet365 scraper. The developers have implemented advanced encryption protocols to protect user data and ensure safe transactions while scraping information from the platform. As online security remains a top concern for many users, these improvements help foster trust and reliability in using the tool.

Overall, this update signifies a significant step forward for those engaged in sports betting analysis. By combining enhanced functionality with robust security features, users are better positioned to navigate the complexities of online wagering effectively. Whether you are a seasoned bettor or just starting out, this revamped scraper promises to elevate your sports betting experience significantly.
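The scraper code that follows pauses with fixed `time.sleep` calls after each page load or click. A fixed pause is either too long or, on a slow connection, too short. As a hedged alternative, here is a small polling helper that returns as soon as a condition holds. `wait_until` is a hypothetical name, and the `clock` and `sleep` parameters are there only to make the helper testable.

```python
import time
from typing import Any, Callable


def wait_until(
    condition: Callable[[], Any],
    timeout: float = 10.0,
    poll_interval: float = 0.5,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> Any:
    """Poll condition() until it returns a truthy value, then return it.

    Raises TimeoutError if the condition is still falsy after `timeout` seconds.
    """
    deadline = clock() + timeout
    while True:
        result = condition()
        if result:
            return result
        if clock() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        sleep(poll_interval)


if __name__ == "__main__":
    start = time.monotonic()
    # Toy condition: becomes true roughly one second after start.
    wait_until(lambda: time.monotonic() - start > 1.0, timeout=5.0, poll_interval=0.1)
    print("condition met")
```

With Selenium, the condition could be `lambda: driver.find_elements(By.CSS_SELECTOR, selector)`, since `find_elements` returns an empty (falsy) list until something matches. Selenium's own `WebDriverWait(driver, 10).until(EC.presence_of_all_elements_located((By.CSS_SELECTOR, selector)))` offers the same idea.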

    import time
    from datetime import datetime

    from selenium import webdriver
    from selenium.webdriver import ChromeOptions
    from selenium.webdriver.common.by import By
    from supabase import create_client

    BET365_URL = "https://www.bet365.com/#/AC/B1/C1/D1002/E91422157/G50538/"


    def driver_code():
        options = ChromeOptions()
        useragentarray = [
            "Mozilla/5.0 (Linux; Android 13) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/113.0.5672.76 Mobile Safari/537.36"
        ]
        # Options only take effect if they are set before the driver is created
        options.add_argument("--disable-blink-features=AutomationControlled")
        options.add_argument("--no-sandbox")
        options.add_argument("--disable-dev-shm-usage")
        options.add_argument("--disable-search-engine-choice-screen")
        options.add_argument("--disable-popup-blocking")
        # options.add_argument(f"--user-data-dir=./profile{driver_num}")
        options.add_experimental_option("excludeSwitches", ["enable-automation"])
        options.add_experimental_option("useAutomationExtension", False)

        driver = webdriver.Chrome(options=options)
        driver.execute_script(
            "Object.defineProperty(navigator, 'webdriver', {get: () => undefined})"
        )
        driver.execute_cdp_cmd(
            "Network.setUserAgentOverride", {"userAgent": useragentarray[0]}
        )
        driver.get(BET365_URL)
        time.sleep(1)
        return driver


    def accept_cookies(driver):
        # Accept the cookie banner if it is shown
        cookies = driver.find_elements(By.CSS_SELECTOR, ".ccm-CookieConsentPopup_Accept")
        if cookies:
            cookies[0].click()


    def open_tab(driver, link):
        driver.execute_script(f"""window.open('{link}', "_blank");""")
        time.sleep(2)
        driver.switch_to.window(driver.window_handles[-1])


    driverBet365 = driver_code()
    open_tab(driverBet365, BET365_URL)
    time.sleep(2)
    accept_cookies(driverBet365)

    # Open closed (collapsed) matches
    closedMatches = driverBet365.find_elements(
        By.CSS_SELECTOR,
        ".gl-MarketGroupPod.src-FixtureSubGroupWithShowMore.src-FixtureSubGroupWithShowMore_Closed",
    )
    for match in closedMatches:
        try:
            match.click()
            time.sleep(2)
        except Exception:
            continue

    # The "Show More" links (msl-ShowMore_Link) are not clicked in this version
    time.sleep(4)

    playerNamesFinal = []
    fixtures = []
    # Odds per shots-on-target line, in the same order as playerNamesFinal
    lines = {"0.5": [], "1.5": [], "2.5": [], "3.5": [], "4.5": []}


    def add_to_list(key, value):
        if key in lines:
            lines[key].append(value)
        else:
            print(f"Key '{key}' not found in lines dictionary")


    tables = driverBet365.find_elements(
        By.CSS_SELECTOR, ".gl-MarketGroupPod.src-FixtureSubGroupWithShowMore"
    )
    for table in tables:
        fixture = table.find_elements(By.CSS_SELECTOR, ".src-FixtureSubGroupButton_Text")
        playerNames = table.find_elements(By.CSS_SELECTOR, ".srb-ParticipantLabelWithTeam_Name")
        sections = table.find_elements(
            By.CSS_SELECTOR,
            ".srb-HScrollPlaceColumnMarket.gl-Market.gl-Market_General.gl-Market_General-columnheader",
        )
        for player in playerNames:
            playerNamesFinal.append(player.text)
            fixtures.append(fixture[0].text)
        # Each section is one column of odds headed by its line (0.5, 1.5, ...)
        for section in sections:
            line = section.find_element(By.CSS_SELECTOR, ".srb-HScrollPlaceHeader")
            odds = section.find_elements(
                By.CSS_SELECTOR,
                ".gl-ParticipantOddsOnly.gl-Participant_General.gl-Market_General-cn1",
            )
            for k in odds:
                if len(k.text) == 0:
                    # An empty cell means the selection is suspended
                    add_to_list(line.text, "N/A")
                    print("Added Suspended Odds to List")
                else:
                    add_to_list(line.text, k.text)
                    print(f"Added {line.text} to List")

    API_URL = 'SUPABASE PROJECT URL'
    API_KEY = 'SUPABASE PROJECT KEY'
    supabase = create_client(API_URL, API_KEY)

    # Store the Over 0.5 and Over 1.5 lines, one row per player and selection
    for line in ("0.5", "1.5"):
        selection = f"Over {line} Shots On Target"
        # zip stops at the shortest list, so a missing odds cell cannot raise IndexError
        for playerName, fixture, odds in zip(playerNamesFinal, fixtures, lines[line]):
            currentTime = datetime.today().strftime('%Y-%m-%d %H:%M:%S')
            try:
                supabase.table('SoTBet365').insert({
                    "fixture": fixture,
                    "playerName": playerName,
                    "selection": selection,
                    "odds": odds,
                    "timeScraped": currentTime,
                }).execute()
                print(f"Successfully Added New Odds Scraped For {playerName} Selection: {selection} Odds: {odds}")
            except Exception as e:
                print(e)

PaddyPower Scraper Updated

    import time
    from datetime import datetime

    from selenium import webdriver
    from selenium.webdriver import ChromeOptions
    from selenium.webdriver.common.by import By
    from supabase import create_client

    PP_URL = "https://www.paddypower.com/english-premier-league"
    GAME_SELECTOR = ".ui-scoreboard-coupon-template__content--vertical-aligner"


    def driver_codePP():
        options = ChromeOptions()
        useragentarray = [
            "Mozilla/5.0 (Linux; Android 13) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/113.0.5672.76 Mobile Safari/537.36"
        ]
        # Options only take effect if they are set before the driver is created
        options.add_argument("--disable-blink-features=AutomationControlled")
        options.add_argument("--no-sandbox")
        options.add_argument("--disable-dev-shm-usage")
        options.add_argument("--disable-search-engine-choice-screen")
        options.add_argument("--disable-popup-blocking")
        options.add_experimental_option("excludeSwitches", ["enable-automation"])
        options.add_experimental_option("useAutomationExtension", False)

        driver = webdriver.Chrome(options=options)
        driver.execute_script(
            "Object.defineProperty(navigator, 'webdriver', {get: () => undefined})"
        )
        driver.execute_cdp_cmd(
            "Network.setUserAgentOverride", {"userAgent": useragentarray[0]}
        )
        driver.get(PP_URL)
        time.sleep(1)
        return driver


    def accept_cookies(driver):
        # Accept the cookie banner if it is shown
        cookies = driver.find_elements(
            By.XPATH, "/html/body/div[4]/div[3]/div/div[1]/div/div[2]/div/button[3]"
        )
        if cookies:
            cookies[0].click()


    driverPP = driver_codePP()
    time.sleep(2)
    accept_cookies(driverPP)

    # player name -> odds scraped for that player
    players = {}

    lengthGames = 10
    for i in range(lengthGames):
        time.sleep(4)
        # Re-query on every pass: the elements go stale after each navigation
        games = driverPP.find_elements(By.CSS_SELECTOR, GAME_SELECTOR)
        if i >= len(games):
            break
        games[i].click()
        time.sleep(4)

        tabs = driverPP.find_elements(By.CSS_SELECTOR, ".tab__title")
        for tab in tabs:
            if tab.text == "Shots":
                tab.click()
                break
        time.sleep(3)

        fixture = driverPP.find_elements(By.CSS_SELECTOR, ".dropdown-selection__label")
        fixtureName = fixture[0].text
        titles = driverPP.find_elements(By.CSS_SELECTOR, ".accordion__title")
        titles[1].click()
        showAllSelections = driverPP.find_elements(By.CSS_SELECTOR, ".market-layout__toggle")
        showAllSelections[0].click()
        showAllSelections[1].click()

        card = driverPP.find_elements(
            By.CSS_SELECTOR,
            ".accordion.accordion--open.accordion--with-divider.accordion--primary",
        )
        over05ShotsOnTargetCard = card[0]
        multipleShotsOnTargetCard = card[1]
        players05 = over05ShotsOnTargetCard.find_elements(By.CSS_SELECTOR, ".market-row__text")
        playersMultiple = multipleShotsOnTargetCard.find_elements(By.CSS_SELECTOR, ".market-row__text")
        odds05 = over05ShotsOnTargetCard.find_elements(By.CSS_SELECTOR, ".btn-odds__label")
        oddsMultiple = multipleShotsOnTargetCard.find_elements(By.CSS_SELECTOR, ".btn-odds__label")
        # The stride of 3 assumes three odds buttons per player row:
        # the first is the 2+ shots price, the second the 3+ shots price
        odds2PlusShots = oddsMultiple[::3]
        odds3Shots = oddsMultiple[1::3]

        for player, odds in zip(players05, odds05):
            players[player.text] = {
                "Over 0.5 SOT Odds": odds.text,
                "Betting Site": "PaddyPower",
                "Fixture": fixtureName,
            }
        for player, odds2, odds3 in zip(playersMultiple, odds2PlusShots, odds3Shots):
            # setdefault avoids a KeyError for players missing from the 0.5 card
            players.setdefault(player.text, {"Betting Site": "PaddyPower"}).update({
                "Over 1.5 SOT Odds": odds2.text,
                "Over 2.5 SOT Odds": odds3.text,
                "Fixture": fixtureName,
            })

        driverPP.get(PP_URL)

    API_URL = 'SUPABASE PROJECT URL'
    API_KEY = 'SUPABASE PROJECT KEY'
    supabase = create_client(API_URL, API_KEY)

    for playerName, info in players.items():
        fixture = info['Fixture']
        currentTime = datetime.today().strftime('%Y-%m-%d %H:%M:%S')
        for selection, key in (
            ("Over 0.5 Shots On Target", "Over 0.5 SOT Odds"),
            ("Over 1.5 Shots On Target", "Over 1.5 SOT Odds"),
        ):
            odds = info.get(key)
            if odds is None:
                continue  # player not listed in this market
            try:
                supabase.table('SoTPP').insert({
                    "fixture": fixture,
                    "playerName": playerName,
                    "selection": selection,
                    "odds": odds,
                    "timeScraped": currentTime,
                }).execute()
                print(f"Successfully Added New Odds Scraped For {playerName} Selection: {selection} Odds: {odds}")
            except Exception as e:
                print(e)

William Hill Scraper

    import time
    from datetime import datetime

    from selenium import webdriver
    from selenium.webdriver import ChromeOptions
    from selenium.webdriver.common.by import By
    from supabase import create_client

    WILLHILL_EPL_URL = "https://sports.williamhill.com/betting/en-gb/football/competitions/OB_TY295/English-Premier-League/matches/OB_MGMB/Match-Betting"


    def driver_codeWillHill():
        options = ChromeOptions()
        useragentarray = [
            "Mozilla/5.0 (Linux; Android 13) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/113.0.5672.76 Mobile Safari/537.36"
        ]
        # Options only take effect if they are set before the driver is created
        options.add_argument("--disable-blink-features=AutomationControlled")
        options.add_argument("--no-sandbox")
        options.add_argument("--disable-dev-shm-usage")
        options.add_argument("--disable-search-engine-choice-screen")
        options.add_argument("--disable-popup-blocking")
        options.add_experimental_option("excludeSwitches", ["enable-automation"])
        options.add_experimental_option("useAutomationExtension", False)

        driver = webdriver.Chrome(options=options)
        driver.execute_script(
            "Object.defineProperty(navigator, 'webdriver', {get: () => undefined})"
        )
        driver.execute_cdp_cmd(
            "Network.setUserAgentOverride", {"userAgent": useragentarray[0]}
        )
        driver.get("https://sports.williamhill.com/betting/en-gb/football")
        time.sleep(1)
        return driver


    def click_first_with_text(elements, text):
        """Click the first element whose visible text equals `text`."""
        for element in elements:
            if element.text == text:
                element.click()
                return True
        return False


    fixtureNamesFinal = []
    playerNameFinal = []
    marketNameFinal = []
    oddsFinal = []

    # Map William Hill's market labels to the selection names used in Supabase
    marketNameDict = {
        "At Least 1 Shot On Target": "Over 0.5 Shots On Target",
        "Over 1 Shot On Target": "Over 1.5 Shots On Target",
        "Over 2 Shots On Target": "Over 2.5 Shots On Target",
        "Over 3 Shots On Target": "Over 3.5 Shots On Target",
    }

    driver_WillHill = driver_codeWillHill()

    # Dismiss the two cookie prompts if they are shown
    for selector in (".cookie-disclaimer__button", ".acceptButton"):
        cookies = driver_WillHill.find_elements(By.CSS_SELECTOR, selector)
        if cookies:
            cookies[0].click()
        time.sleep(2)

    buttons = driver_WillHill.find_elements(By.CSS_SELECTOR, ".grid.grid--vertical")
    click_first_with_text(buttons, "Competitions")
    time.sleep(2)
    leagues = driver_WillHill.find_elements(By.CSS_SELECTOR, ".css-18mscgy")
    click_first_with_text(leagues, "English Premier League")
    time.sleep(2)

    games = driver_WillHill.find_elements(By.CSS_SELECTOR, ".sp-betName--small")
    gamesLen = len(games)
    for i in range(gamesLen):
        # Re-query on every pass: the elements go stale after each navigation
        games = driver_WillHill.find_elements(By.CSS_SELECTOR, ".sp-betName--small")
        time.sleep(2)
        try:
            games[i].click()
        except Exception:
            continue
        time.sleep(2)
        try:
            fixtureName = driver_WillHill.find_elements(By.CSS_SELECTOR, ".css-1e83nb2")[0].text
        except Exception:
            fixtureName = "N/A"

        filters = driver_WillHill.find_elements(By.CSS_SELECTOR, ".disabled.filter-list__link")
        scrapePlayers = click_first_with_text(filters, "Players")
        time.sleep(2)

        if scrapePlayers:
            markets = driver_WillHill.find_elements(
                By.CSS_SELECTOR, ".header-dropdown.header-dropdown--large"
            )
            click_first_with_text(markets, "Player Shots on Target")

            marketNames = driver_WillHill.find_elements(By.CSS_SELECTOR, ".btmarket__name")
            marketNamesList = [m.text for m in marketNames if m.text != ""]
            odds = driver_WillHill.find_elements(By.CSS_SELECTOR, ".betbutton__odds")
            oddsList = [o.text for o in odds if o.text != ""]

            # Labels look like "<player name> Over 1 Shot On Target";
            # split each one at the first "At" or "Over" token
            playerNames = []
            marketLabels = []
            for label in marketNamesList:
                words = label.split(" ")
                for j, word in enumerate(words):
                    if word in ("At", "Over"):
                        playerNames.append(' '.join(words[:j]))
                        marketLabels.append(' '.join(words[j:]))
                        break

            for playerName, marketLabel, price in zip(playerNames, marketLabels, oddsList):
                fixtureNamesFinal.append(fixtureName)
                playerNameFinal.append(playerName)
                marketNameFinal.append(marketNameDict.get(marketLabel))
                oddsFinal.append(price)

        # Return to the match list before opening the next game
        time.sleep(3)
        driver_WillHill.get(WILLHILL_EPL_URL)

    API_URL = 'SUPABASE PROJECT URL'
    API_KEY = 'SUPABASE PROJECT KEY'
    supabase = create_client(API_URL, API_KEY)

    for playerName, fixture, odds, marketName in zip(
        playerNameFinal, fixtureNamesFinal, oddsFinal, marketNameFinal
    ):
        currentTime = datetime.today().strftime('%Y-%m-%d %H:%M:%S')
        try:
            supabase.table('SoTWillHill').insert({
                "fixture": fixture,
                "playerName": playerName,
                "selection": marketName,
                "odds": odds,
                "timeScraped": currentTime,
            }).execute()
            print(f"Successfully Added New Odds Scraped For {playerName} Selection: {marketName} Odds: {odds}")
        except Exception as e:
            print(e)

The Unibet Scraper has received a significant update, enhancing its functionality and user experience. This upgrade aims to streamline the process of collecting data from various sports betting platforms, allowing users to access vital information more efficiently. With improved algorithms and user-friendly features, bettors can now navigate through odds and statistics with greater ease than ever before.

One of the standout improvements includes a more intuitive interface that simplifies data visualization. Users can quickly identify trends and make informed decisions based on real-time updates. The redesign not only enhances usability but also ensures that critical insights are readily available at a glance, empowering bettors to optimize their strategies effectively.

Additionally, this latest version boasts faster loading times and increased compatibility across devices, making it accessible for users whether they are on desktop or mobile platforms. The commitment to performance optimization means that bettors can rely on timely information without unnecessary delays, which is crucial in the fast-paced world of sports betting where every second counts.

Moreover, security enhancements have been implemented to protect user data while utilizing the scraper. With rising concerns over privacy in digital environments, this update prioritizes safeguarding personal information against potential threats, thus fostering trust among its users as they engage with online betting markets.

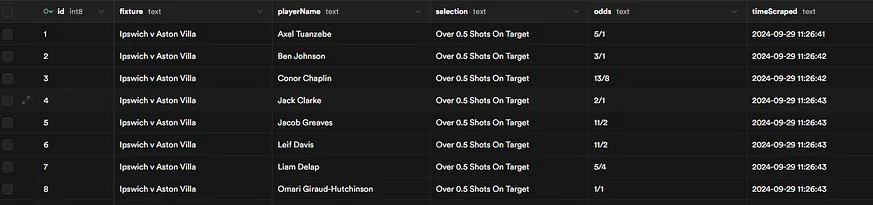

    import time
    from datetime import datetime

    from selenium import webdriver
    from selenium.webdriver import ChromeOptions
    from selenium.webdriver.common.by import By
    from supabase import create_client

    UNIBET_URL = "https://www.unibet.ie/betting/sports/filter/football/england/premier_league/all/matches"


    def driver_uniBet():
        options = ChromeOptions()
        useragentarray = [
            "Mozilla/5.0 (Linux; Android 13) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/113.0.5672.76 Mobile Safari/537.36"
        ]
        # Options only take effect if they are set before the driver is created
        options.add_argument("--disable-blink-features=AutomationControlled")
        options.add_argument("--no-sandbox")
        options.add_argument("--disable-dev-shm-usage")
        options.add_argument("--disable-search-engine-choice-screen")
        options.add_argument("--disable-popup-blocking")
        options.add_experimental_option("excludeSwitches", ["enable-automation"])
        options.add_experimental_option("useAutomationExtension", False)

        driver = webdriver.Chrome(options=options)
        driver.execute_script(
            "Object.defineProperty(navigator, 'webdriver', {get: () => undefined})"
        )
        driver.execute_cdp_cmd(
            "Network.setUserAgentOverride", {"userAgent": useragentarray[0]}
        )
        driver.get(UNIBET_URL)
        time.sleep(1)
        return driver


    driver = driver_uniBet()

    # Dismiss the cookie prompt if it is shown
    buttons = driver.find_elements(
        By.XPATH, "/html/body/div[13]/div[2]/div/div/div[2]/div[1]/div/button[2]"
    )
    if buttons:
        buttons[0].click()

    playersFinal = []
    oddsFinal = []
    marketsFinal = []
    fixturesFinal = []

    lengthGames = 10
    for i in range(lengthGames):
        time.sleep(2)
        # Re-query on every pass: the elements go stale after each navigation
        games = driver.find_elements(By.CSS_SELECTOR, "._20e33")
        if i >= len(games):
            break
        games[i].click()
        time.sleep(2)
        try:
            tabs = driver.find_elements(By.CSS_SELECTOR, ".KambiBC-filter-menu__option")
            for tab in tabs:
                if tab.text == "Player Shots on Target":
                    tab.click()
                    break
            time.sleep(2)

            players = driver.find_elements(
                By.CSS_SELECTOR,
                ".KambiBC-outcomes-list__row-header.KambiBC-outcomes-list__row-header--participant",
            )
            fixture = driver.find_elements(By.CSS_SELECTOR, ".fc699")
            playersList = [p.text for p in players if p.text != " "]
            market = driver.find_elements(By.CSS_SELECTOR, ".sc-eqUAAy.kLwvTb")
            odds = driver.find_elements(By.CSS_SELECTOR, ".sc-gsFSXq.dqtSKK")

            # Each market cell reads "Over" or "Under" on one line and the line
            # value (0.5, 1.5, ...) on the next. A 0.5 line marks the start of
            # the next player's block, so advance that side's player index.
            playerIndexOver = -1
            playerIndexUnder = -1
            for cell, price in zip(market, odds):
                parts = cell.text.splitlines()
                overOrUnder, line = parts[0], parts[1]
                if overOrUnder == "Over":
                    if line == "0.5":
                        playerIndexOver += 1
                    index = playerIndexOver
                else:
                    if line == "0.5" and overOrUnder == "Under":
                        playerIndexUnder += 1
                    index = playerIndexUnder
                playersFinal.append(playersList[index])
                oddsFinal.append(price.text)
                marketsFinal.append(overOrUnder + " " + line)
                fixturesFinal.append(fixture[0].text)
            time.sleep(2)
        except Exception as e:
            print(e)
        # Return to the match list before opening the next game
        driver.get(UNIBET_URL)

    API_URL = 'SUPABASE PROJECT URL'
    API_KEY = 'SUPABASE PROJECT KEY'
    supabase = create_client(API_URL, API_KEY)

    for playerName, fixture, odds, marketName in zip(
        playersFinal, fixturesFinal, oddsFinal, marketsFinal
    ):
        currentTime = datetime.today().strftime('%Y-%m-%d %H:%M:%S')
        try:
            supabase.table('SoTUniBet').insert({
                "fixture": fixture,
                "playerName": playerName,
                "selection": marketName,
                "odds": odds,
                "timeScraped": currentTime,
            }).execute()
            print(f"Successfully Added New Odds Scraped For {playerName} Selection: {marketName} Odds: {odds}")
        except Exception as e:
            print(e)

Examine each scraper carefully to ensure that they are functioning correctly on your side. Additionally, take a look at your Supabase tables; they should be filled with data similar to the examples shown below.
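One thing to keep in mind when checking the stored rows: the scrapers save odds as the raw text shown on each site, and the Bet365 script writes "N/A" for suspended selections. Comparing prices across bookmakers is easier once that text is a number. Below is a minimal sketch of such a conversion, assuming the text is either fractional (such as "5/2") or already decimal. `to_decimal_odds` is a hypothetical helper, and the "EVS"/"Evens" handling is an assumption about how a site might label even money.

```python
from fractions import Fraction
from typing import Optional


def to_decimal_odds(text: str) -> Optional[float]:
    """Convert scraped odds text to decimal odds, or None if unavailable.

    Fractional odds a/b become a/b + 1; decimal text is parsed as-is.
    """
    cleaned = text.strip().upper()
    if cleaned in ("", "N/A"):
        return None  # suspended or missing selection
    if cleaned in ("EVS", "EVENS"):
        return 2.0  # assumed labels for even money (1/1)
    try:
        if "/" in cleaned:
            return float(Fraction(cleaned) + 1)
        return float(cleaned)
    except (ValueError, ZeroDivisionError):
        return None  # text that is not recognisable as odds


if __name__ == "__main__":
    for raw in ("5/2", "11/4", "3.50", "N/A"):
        print(raw, "->", to_decimal_odds(raw))
```

Returning `None` for unreadable text keeps one malformed cell from stopping a whole comparison run; the caller decides whether to skip or log it.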

Thank you for taking the time to read today's piece. If you found it enjoyable, please give it a clap and consider following for more insightful content in the future!

